The problem (from Larson’s Calculus, an Applied Approach, 10th edition, Section 7.8, no. 18 in my paperback edition, no. 17 in the e-book edition) does not seem that unusual at a very quick glance:

if you have a hard time reading the image. AND, *if* you just blindly do the formal calculations:

which is what the text has as “the answer.”

But come on. We took a function that was negative in the first quadrant, integrated it entirely in the first quadrant (in standard order) and ended up with a positive number??? I don’t think so!

Indeed, if we perform which is far more believable.

So, we KNOW something is wrong. Now let’s attempt to sketch the region first:

Oops! Note: if we just used the quarter circle boundary we obtain

The 3-dimensional situation: we are limited by the graph of the function, the cylinder and the planes

; the plane

is outside of this cylinder. (from here: the red is the graph of

Now think about what the “formal calculation” really calculated and wonder if it was just a coincidence that we got the absolute value of the integral taken over the rectangle

This idea started as a bit of a joke:

Of course, for readers of this blog: easy-peasy. so the integral is transformed into

and so we’ve entered the realm of rational functions. Ok, ok, there is some work to do.

But for now, notice what is really going on: we have a function under the radical that has an inverse function (IF we are careful about domains) and said inverse function has a derivative which is a rational function.

More shortly: let be such that

then:

gets transformed:

and then

and the integral becomes

which is a rational function integral.

Yes, yes, we need to mind domains.
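To make the pattern concrete, here is a small numerical sketch. The integrand $\sqrt{(1+x)/(1-x)}$ on $[0, 1/2]$ is a hypothetical example chosen for illustration (it is not necessarily the integral from this post): with $f(x) = (1+x)/(1-x)$ the inverse is $f^{-1}(y) = (y-1)/(y+1)$, whose derivative $2/(y+1)^2$ is rational, so $u = \sqrt{f(x)}$ should turn the integral into $\int 4u^2/(u^2+1)^2\,du$. The helper name `simpson` is likewise just illustrative.

```python
import math

def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is the (even) number of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

# Hypothetical example: f(x) = (1 + x) / (1 - x), integrated on [0, 1/2].
def radical_integrand(x):
    return math.sqrt((1 + x) / (1 - x))

# After u = sqrt(f(x)): x = (u^2 - 1)/(u^2 + 1), dx = 4u/(u^2 + 1)^2 du,
# so sqrt(f(x)) dx becomes the rational function 4u^2/(u^2 + 1)^2 du.
def rational_integrand(u):
    return 4 * u**2 / (u**2 + 1) ** 2

original = simpson(radical_integrand, 0.0, 0.5)
# Limits transform as u = sqrt(f(0)) = 1 and u = sqrt(f(1/2)) = sqrt(3).
transformed = simpson(rational_integrand, 1.0, math.sqrt(3))

print(original, transformed)
```

The two printed values should agree closely; minding the domain here means staying on $0 \le x < 1$, where $f$ is positive and one-to-one.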

Ok, just for fun:

The usual is to use which transforms this to the dreaded

integral, which is a double integration by parts.

Is there a way out? I think so, though the price one pays is a trickier conversion back to x.

Let’s try so upon substituting we obtain

and noting that

always:

Now this can be integrated by parts: let

So but this easily reduces to:

Division by 2:

That was easy enough.

But we now have the conversion to x:

So far, so good. But what about ?

Write:

Now multiply both sides by to get

and use the quadratic formula to get

We need so

and that is our integral:

I guess that this isn’t that much easier after all.
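A quick numerical sanity check, under an assumption: the sketch below takes the integral in question to be $\int\sqrt{1+x^2}\,dx$ with the substitution $x = \sinh(t)$, which leads to the candidate antiderivative $F(x) = \frac{1}{2}\left(x\sqrt{1+x^2} + \ln\left(x+\sqrt{1+x^2}\right)\right)$. The names `F` and `central_difference` are illustrative. It checks that $F'(x) \approx \sqrt{1+x^2}$ and that the quadratic-formula expression for $t$ agrees with the built-in inverse hyperbolic sine.

```python
import math

def F(x):
    """Candidate antiderivative of sqrt(1 + x^2) (assumed integrand)."""
    root = math.sqrt(1 + x * x)
    return 0.5 * (x * root + math.log(x + root))

def central_difference(g, x, h=1e-5):
    """Symmetric difference quotient approximating g'(x)."""
    return (g(x + h) - g(x - h)) / (2 * h)

sample_points = [-3.0, -0.5, 0.0, 1.0, 4.0]

# Largest gap between the numerical derivative of F and the integrand.
derivative_error = max(
    abs(central_difference(F, x) - math.sqrt(1 + x * x)) for x in sample_points
)

# t = ln(x + sqrt(x^2 + 1)) should invert x = sinh(t).
inverse_error = max(
    abs(math.log(x + math.sqrt(x * x + 1)) - math.asinh(x)) for x in sample_points
)

print(derivative_error, inverse_error)
```

Both printed errors should be tiny; the positive root of the quadratic is the one that keeps the argument of the logarithm positive for every real $x$.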

I’ve discovered the channel “blackpenredpen” and it is delightful.

It is a nice escape into mathematics that, while far from research level, is “fun” and beyond mere fluff.

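And that got me to thinking about $\int_0^{\infty} \frac{\sin(x)}{x}\,dx$. Yes, this can be done by residues.

But I’ll look at this with Laplace Transforms.

We know that $\mathcal{L}(\sin(x)) = \int_0^{\infty} e^{-sx}\sin(x)\,dx = \frac{1}{s^2+1}$.

But note that the antiderivative of $e^{-sx}$ with respect to $s$ is $-\frac{1}{x}e^{-sx}$.

That might not seem like much help, but then notice $\int_0^{\infty} e^{-sx}\,ds = \frac{1}{x}$ (assuming $x > 0$).

So why not: $\int_0^{\infty}\int_0^{\infty} e^{-sx}\sin(x)\,ds\,dx = \int_0^{\infty} \frac{\sin(x)}{x}\,dx$.

Now since the left hand side is just a double integral over the first quadrant (an infinite rectangle) the order of integration can be interchanged:

$\int_0^{\infty}\int_0^{\infty} e^{-sx}\sin(x)\,dx\,ds = \int_0^{\infty} \frac{1}{s^2+1}\,ds = \arctan(s)\Big|_0^{\infty}$

and that is equal to $\frac{\pi}{2}$.

Note: $\frac{\sin(x)}{x}$ is sometimes called the $\text{sinc}(x)$ function.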
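A numerical spot-check of the argument, as a minimal sketch. It assumes the integral under discussion is $\int_0^{\infty} \frac{\sin x}{x}\,dx$ and that the Laplace transform fact is $\int_0^{\infty} e^{-sx}\sin x\,dx = \frac{1}{s^2+1}$. The helper names and the truncation points are illustrative choices, and the improper integrals are only approximated on finite intervals.

```python
import math

def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n is the (even) number of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def laplace_sin(s, upper=60.0):
    """Truncated Laplace transform of sin(x); the tail beyond `upper` is negligible for s >= 1."""
    return simpson(lambda x: math.exp(-s * x) * math.sin(x), 0.0, upper)

def sinc(x):
    """sin(x)/x with the removable singularity at 0 filled in."""
    return 1.0 if x == 0 else math.sin(x) / x

# Compare with 1/(s^2 + 1) at s = 2, i.e. with 0.2.
laplace_at_2 = laplace_sin(2.0)

# Partial integral of sinc up to 2000*pi; it approaches pi/2 slowly (error roughly 1/upper).
dirichlet_partial = simpson(sinc, 0.0, 2000 * math.pi, n=400000)

# The s-integral on a finite interval is arctan(S), which tends to pi/2.
arctan_partial = simpson(lambda s: 1 / (s * s + 1), 0.0, 1000.0, n=400000)

print(laplace_at_2, dirichlet_partial, arctan_partial, math.pi / 2)
```

The slow convergence of the `sinc` integral is a reminder that it converges only conditionally, so the interchange of the order of integration deserves more care than this check can supply.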


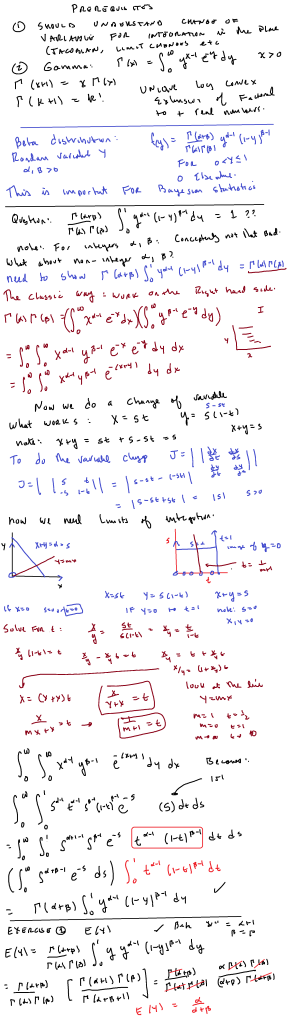

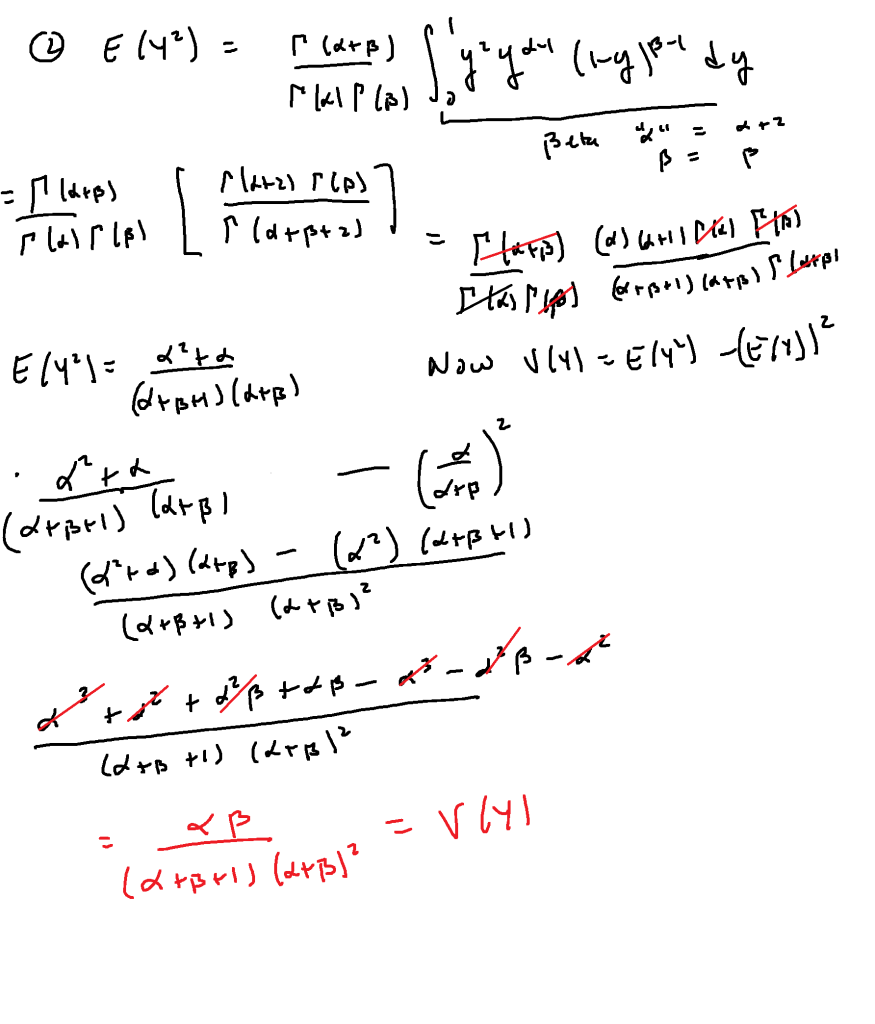

My interest in “beta” functions comes from their utility in Bayesian statistics. A nice 78-minute introduction to Bayesian statistics and how the beta distribution is used can be found here; you need to understand basic mathematical statistics concepts such as “joint density”, “marginal density”, “Bayes’ Rule” and “likelihood function” to follow the YouTube lecture. To follow this post, one should know the standard “3 semesters” of calculus and know what the gamma function is (the extension of the factorial function to the real numbers); previous exposure to the standard “polar coordinates” proof that $\int_{-\infty}^{\infty} e^{-x^2}\,dx = \sqrt{\pi}$ would be very helpful.

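So, what is the beta function? It is $B(a,b) = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)}$ where $\Gamma(x) = \int_0^{\infty} t^{x-1}e^{-t}\,dt$. Note that $\Gamma(n+1) = n!$ for nonnegative integers $n$.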

The gamma function is the unique “logarithmically convex” extension of the factorial function to the real line, where “logarithmically convex” means that the logarithm of the function is convex; that is, the second derivative of the log of the function is positive. Roughly speaking, this means that the function exhibits growth behavior similar to (or “greater” than) $e^x$.

Now it turns out that the beta density function is defined as follows: for

as one can see that the integral is either proper or a convergent improper integral for

.

I'll do this in two steps. Step one will convert the beta integral into an integral involving powers of sine and cosine. Step two will be to write $\Gamma(a)\Gamma(b)$ as a product of two integrals, do a change of variables and convert to an improper integral on the first quadrant. Then I'll convert to polar coordinates to show that this integral is equal to

Step one: converting the beta integral to a sine/cosine integral. Limit and then do the substitution

. Then the beta integral becomes:

Step two: transforming the product of two gamma functions into a double integral and evaluating using polar coordinates.

Write

Now do the conversion to obtain:

(there is a tiny amount of algebra involved)

From which we now obtain

Now we switch to polar coordinates, remembering the $r$ that comes from evaluating the Jacobian of

This splits into two integrals:

The first of these integrals is just so now we have:

The second integral: we just use to obtain:

(yes, I cancelled the 2 with the 1/2)

And so the result follows.
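As a sanity check on the result, here is a minimal numerical sketch. It assumes the identity being proved is $\int_0^1 x^{a-1}(1-x)^{b-1}\,dx = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)}$ and that step one uses $x = \sin^2(t)$, giving $2\int_0^{\pi/2}\sin^{2a-1}(t)\cos^{2b-1}(t)\,dt$. The sample values $a = 2.5$, $b = 3.5$ and the helper names are illustrative.

```python
import math

def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n is the (even) number of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

# Illustrative parameters; both exceed 1 so the beta integral is proper.
a, b = 2.5, 3.5

# The beta integral in its original form.
beta_integral = simpson(lambda x: x ** (a - 1) * (1 - x) ** (b - 1), 0.0, 1.0)

# The same integral after the assumed substitution x = sin^2(t).
trig_integral = 2 * simpson(
    lambda t: math.sin(t) ** (2 * a - 1) * math.cos(t) ** (2 * b - 1),
    0.0,
    math.pi / 2,
)

# The closed form in terms of gamma functions.
gamma_ratio = math.gamma(a) * math.gamma(b) / math.gamma(a + b)

print(beta_integral, trig_integral, gamma_ratio)
```

All three printed numbers should agree to many digits. For $0 < a < 1$ or $0 < b < 1$ the integrand is unbounded at an endpoint, so a plain Simpson rule like this one would need to be replaced by something that handles the singularity.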

That seems complicated for a simple little integral, doesn’t it?

I didn’t have the best day Thursday; I was very sick (felt as if I had been in a boxing match..chills, aches, etc.) but was good to go on Friday (no cough, etc.)

So I walk into my complex variables class seriously under prepared for the lesson but decide to tackle the integral

Of course, you know the easy way to do this, right?

and evaluate the latter integral as follows:

(this follows from restricting

to the unit circle

and setting

and then obtaining a rational function of

which has isolated poles inside (and off of) the unit circle and then using the residue theorem to evaluate.

So And then the integral is transformed to:

Now the denominator factors: which means

but only the roots

lie inside the unit circle.

Let

Write:

Now calculate: and

Adding we get so by Cauchy’s theorem

Ok…that is fine as far as it goes and correct. But what stumped me: suppose I did not evaluate and divide by two but instead just went with:

where

is the upper half of

? Well,

has a primitive away from those poles so isn’t this just

, right?

So why not just integrate along the x-axis to obtain because the integrand is an odd function?

This drove me crazy. Until I realized…the poles….were…on…the…real…axis. ….my goodness, how stupid could I possibly be???

To the student who might not have followed my point: let be the upper half of the circle

taken in the standard direction and

if you do this properly (hint: set

. Now attempt to integrate from 1 to -1 along the real axis. What goes wrong? What goes wrong is exactly what I missed in the above example.
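For the student exercise, a small numerical sketch may help. It assumes the integrand is $f(z) = 1/z$ and the curve is the upper half of $|z| = 1$, parametrized by $z = e^{it}$ for $0 \le t \le \pi$; both are assumptions, and the function names are illustrative. The second function imitates the naive "integrate along the real axis instead" idea with sample points that are symmetric about the pole at 0.

```python
import cmath
import math

def upper_half_circle_integral(f, n=10000):
    """Midpoint-rule approximation of the integral of f over z = e^{it}, 0 <= t <= pi."""
    dt = math.pi / n
    total = 0j
    for k in range(n):
        t = (k + 0.5) * dt
        z = cmath.exp(1j * t)
        total += f(z) * 1j * z * dt  # dz = i e^{it} dt
    return total

def real_axis_symmetric_sum(f, n=10000):
    """Midpoint sum of f from 1 to -1 along the real axis.

    With n even, the sample points are symmetric about 0 and never land on it,
    so for an odd integrand the terms cancel in pairs.
    """
    dx = -2.0 / n  # negative step: we travel from 1 to -1
    return sum(f(1.0 + (k + 0.5) * dx) * dx for k in range(n))

def reciprocal(z):
    return 1 / z

arc_value = upper_half_circle_integral(reciprocal)   # close to i*pi
axis_value = real_axis_symmetric_sum(reciprocal)     # close to 0

print(arc_value, axis_value)
```

The two paths share endpoints but give different answers, because the straight path runs through the pole: the symmetric sum only looks finite thanks to cancellation, and there is no single primitive valid on a region containing both paths.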

This is based on a Mathematics Magazine article by Irving Katz: An Inequality of Orthogonal Complements found in Mathematics Magazine, Vol. 65, No. 4, October 1992 (258-259).

In finite dimensional inner product spaces, we often prove that $(W^{\perp})^{\perp} = W$. My favorite way to do this: I introduce Gram-Schmidt early and find an orthogonal basis for $W$ and then extend it to an orthogonal basis for the whole space; the basis elements that are not basis elements of $W$ are automatically the basis for $W^{\perp}$. Then one easily deduces that $(W^{\perp})^{\perp} = W$ (and that any vector can easily be broken into a projection onto $W$ plus a projection onto $W^{\perp}$), etc.

But this sort of construction runs into difficulty when the space is infinite dimensional; one points out that the vector addition operation is defined only for the addition of a finite number of vectors. No, we don’t deal with Hilbert spaces in our first course. 🙂

So what is our example? I won’t belabor the details as they can make good exercises whose solution can be found in the paper I cited.

So here goes: let be the vector space of all polynomials. Let

the subspace of even polynomials (all terms have even degree),

the subspace of odd polynomials, and note that

Let the inner product be . Now it isn’t hard to see that

and

.

Now let denote the subspace of polynomials whose terms all have degree that are multiples of 4 (e. g.

and note that

.

To see the reverse inclusion, note that if ,

where

and then

for any

. So we see that it must be the case that

as well.

Now we can write: and therefore

for

Now I wish I had a more general proof of this. But these equations (for each lead to a system of equations:

It turns out that the given square matrix is non-singular (see page 92, no. 3 of Polya and Szego: Problems and Theorems in Analysis, Vol. 2, 1976) and so the . This means

and so

Anyway, the conclusion leaves me cold a bit. It seems as if I should be able to prove: let be some, say…

function over

where

for all

then

. I haven’t found a proof as yet…perhaps it is false?

Ok, classes ended last week and my brain is way out of math shape. Right now I am contemplating how to show that the complements of this object

and of the complement of the object depicted in figure 3, are NOT homeomorphic.

I can do this in this very specific case; I am interested in seeing what happens if the “tangle pattern” is changed. Are the complements of these two related objects *always* topologically different? I am reasonably sure yes, but my brain is rebelling at doing the hard work to nail it down.

Anyhow, finals are graded and I am usually treated to one unusual student trick. Here is one for the semester:

Now I was hoping that they would say in which case the integral is translated to:

which is easy to do.

Now those wanting to do it a more difficult (but still sort of standard) way could do two repetitions of integration by parts with the first set up being and that works just fine.

But I did see this: (ok, there are some domain issues here but never mind that) and we end up with the transformed integral:

which can be transformed to

by elementary trig identities.

And yes, that leads to an answer of which, upon using the triangle

gives you an answer that is exactly in the same form as the desired “rationalization substitution” answer. Yeah, I gave full credit despite the “domain issues” (in the original integral, it is possible for ).

What can I say?

I saw polar coordinate calculus for the first time in 1977. I’ve taught calculus as a TA and as a professor since 1987. And yet, I’ve never thought of this simple little fact.

Consider $r = \sin(n\theta)$. Now it is well known that the area formula (area enclosed by a polar graph, assuming no “doubling”, self intersections, etc.) is $A = \frac{1}{2}\int_{\alpha}^{\beta} r^2\,d\theta$.

Now the $n$ leaved roses have the following types of graphs: $n$ leaves if $n$ is odd, and $2n$ leaves if $n$ is even (in the odd case, the graph doubles itself).

So here is the question: how much total area is covered by the graph (all the leaves put together, do NOT count “overlapping”)?

Well, for $n$ an integer, the answer is: $\frac{\pi}{4}$ if $n$ is odd, and $\frac{\pi}{2}$ if $n$ is even! That’s it! Want to know why?

Do the integral: if $n$ is odd, our total area is $\frac{n}{2}\int_0^{\pi/n} \sin^2(n\theta)\,d\theta = \frac{n}{4}\int_0^{\pi/n} \left(1 - \cos(2n\theta)\right)d\theta = \frac{\pi}{4}$. If $n$ is even, we have the same integral but the outside coefficient is $\frac{2n}{2} = n$ which is the only difference. Aside from parity, the number of leaves does not matter as to the total area!
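Here is a minimal numerical check of the parity claim, assuming the rose is $r = \sin(n\theta)$, that a single leaf is swept out for $0 \le \theta \le \pi/n$, and that there are $n$ leaves for odd $n$ and $2n$ for even $n$. The function names are illustrative.

```python
import math

def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is the (even) number of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def rose_total_area(n):
    """Total area covered by r = sin(n*theta), counting each leaf once."""
    # Area of one leaf: (1/2) * integral of r^2 over 0 <= theta <= pi/n.
    leaf_area = 0.5 * simpson(lambda t: math.sin(n * t) ** 2, 0.0, math.pi / n)
    # Assumed leaf count: n leaves for odd n, 2n leaves for even n.
    leaves = n if n % 2 == 1 else 2 * n
    return leaves * leaf_area

areas = {n: rose_total_area(n) for n in range(1, 9)}

for n, area in areas.items():
    print(n, area)
```

For $n = 1$ the "rose" is just a circle of diameter 1, whose area $\pi/4$ matches the odd case.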

Now the fun starts when one considers a fractional multiple of $\theta$ and I might ponder that some.