Let’s start with an example from sports: basketball free throws. At certain times in a game, a player is awarded a free throw, where the player stands 15 feet away from the basket and is allowed to shoot to make a basket, which is worth 1 point. In the NBA, a player will take 1, 2 or 3 shots, depending on the foul; the rules are slightly different for college basketball.

Each player will have a “free throw percentage”, which is the number of made shots divided by the number of attempts. For NBA players, the league average is .672, with a variance (across players) of .0074.

Now suppose you want to determine how well a player will do, given, say, a sample of the player’s data. Under classical (aka “frequentist”) statistics, one looks at how well the player has done, calculates the percentage ($\hat{p}$) and then determines a confidence interval for said $p$: using the normal approximation to the binomial distribution, this works out to

$$\hat{p} \pm z_{\frac{\alpha}{2}} \sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$$
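To see the problem concretely, here is a minimal Python sketch (standard library only) of the normal-approximation interval $\hat{p} \pm z_{\alpha/2}\sqrt{\hat{p}(1-\hat{p})/n}$. The function name `wald_interval`, the 90 percent default, and the 14-for-20 rookie are hypothetical choices for illustration.

```python
import math
from statistics import NormalDist


def wald_interval(makes: int, attempts: int, confidence: float = 0.90) -> tuple:
    """Normal-approximation (Wald) confidence interval for a binomial proportion."""
    p_hat = makes / attempts
    # z_{alpha/2}: the standard normal quantile leaving alpha/2 in the upper tail
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / attempts)
    return (p_hat - half_width, p_hat + half_width)


# Hypothetical rookie data: 14 makes in 20 attempts
print(wald_interval(14, 20))
# Bird's 71-for-71 streak: p_hat = 1, so the interval has zero width
print(wald_interval(71, 71))  # (1.0, 1.0)
```

The second call shows the degenerate case discussed next: with every shot made, $\hat{p}(1-\hat{p}) = 0$ and the interval collapses to a point.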

Yes, I know: for someone who has played a long time, one has career statistics. So imagine one is trying to extrapolate for a new player with limited data.

That seems straightforward enough. But what if one samples the player’s shooting during an unusually good or unusually bad streak? Example: former NBA star Larry Bird once made 71 straight free throws. If that were the sample, $\hat{p} = 1$ with variance zero! Needless to say, that trend is highly unlikely to continue.

Classical frequentist statistics doesn’t offer a way out, but Bayesian statistics does.

This is a good introduction:

But here is a simple, “rough and ready” introduction. Bayesian statistics uses not only the observed sample but also a proposed distribution for the parameter of interest (in this case, $p$, the probability of making a free throw). The proposed distribution is called a prior distribution, or just prior. That is often labeled $g(p)$.

Since we are dealing with what amounts to 71 Bernoulli trials with success probability $p$, the random variable $Y_i$ describing the outcome of each individual shot has probability mass function $p^{y_i}(1-p)^{1-y_i}$, where $y_i = 1$ for a make and $y_i = 0$ for a miss.

Our goal is to calculate what is known as a posterior distribution (or just posterior), which describes $p$ after updating with the data; we’ll call that $g(p \mid y_1, y_2, \ldots, y_{71})$.

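How we go about it: use the principles of joint distributions, likelihood functions and marginal distributions to calculate

$$g(p \mid y_1, \ldots, y_{71}) = \frac{L(y_1, \ldots, y_{71} \mid p)\, g(p)}{\int_0^1 L(y_1, \ldots, y_{71} \mid p)\, g(p)\, dp}, \qquad L(y_1, \ldots, y_{71} \mid p) = \prod_{i=1}^{71} p^{y_i}(1-p)^{1-y_i}.$$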

The denominator “integrates out” $p$ to turn the joint distribution into a marginal; remember that the $y_i$ are set to the observed values. In our case, all $y_i$ are 1, with $\sum_{i=1}^{71} y_i = 71$.

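What works well is to use the beta distribution for the prior. Note: the pdf is $\frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)} x^{a-1}(1-x)^{b-1}$ on $0 < x < 1$, and if one uses

$$g(p) = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)} p^{a-1}(1-p)^{b-1},$$

this works very well. Now because the mean will be

$$\mu = \frac{a}{a+b}$$

and the variance

$$\sigma^2 = \frac{ab}{(a+b)^2(a+b+1)},$$

given the required mean and variance, one can work out $a$ and $b$ algebraically.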
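The algebra is short: since $\sigma^2 = \mu(1-\mu)/(a+b+1)$, we get $a + b = \mu(1-\mu)/\sigma^2 - 1$, then $a = \mu(a+b)$ and $b = (1-\mu)(a+b)$. A minimal Python sketch of this method-of-moments step (the function name is hypothetical):

```python
def beta_params_from_moments(mean: float, var: float) -> tuple:
    """Solve mean = a/(a+b) and var = ab/((a+b)^2 (a+b+1)) for a and b."""
    # var = mean * (1 - mean) / (a + b + 1), so a + b = mean * (1 - mean) / var - 1
    total = mean * (1 - mean) / var - 1
    if total <= 0:
        raise ValueError("variance too large for a beta distribution with this mean")
    return mean * total, (1 - mean) * total


a, b = beta_params_from_moments(0.672, 0.0074)
print(round(a, 2), round(b, 2))  # 19.34 9.44
```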

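Now look at the numerator, which consists of the product of a likelihood function and a density function: up to the constant $k = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}$, if we set $y = \sum_{i=1}^{n} y_i$ (here $n = 71$), we get

$$k\, p^{y}(1-p)^{n-y} \cdot p^{a-1}(1-p)^{b-1} = k\, p^{y+a-1}(1-p)^{n-y+b-1}.$$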

The denominator: same thing, but $p$ gets integrated out and the constant $k$ cancels; basically, the denominator is what makes the fraction into a density function.

So, in effect, we have $g(p \mid y) \propto p^{y+a-1}(1-p)^{n-y+b-1}$, which is just a beta distribution with new parameters $a^* = a + y$ and $b^* = b + n - y$.
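The claim that likelihood times prior, once normalized, is exactly a Beta$(a + y,\ b + n - y)$ density can be checked numerically. The Python sketch below (standard library only) computes the denominator of Bayes’ rule with a midpoint-rule integral and compares the result with the conjugate beta density at a few points. The integrator and the rounded prior values $a = 19.34$, $b = 9.44$ (from the moment formulas applied to .672 and .0074) are assumptions of this sketch.

```python
import math


def beta_pdf(p: float, a: float, b: float) -> float:
    """Beta(a, b) density for 0 < p < 1, computed through log-gamma to avoid overflow."""
    log_k = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_k + (a - 1) * math.log(p) + (b - 1) * math.log(1 - p))


def midpoint(f, lo: float, hi: float, n: int = 20_000) -> float:
    """Composite midpoint rule; never evaluates f at the endpoints."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


a, b = 19.34, 9.44  # prior parameters (method of moments on .672 and .0074)
n, y = 71, 71       # attempts and makes during the streak


def numerator(p: float) -> float:
    # likelihood p^y (1 - p)^(n - y) times the prior density g(p)
    return p**y * (1 - p) ** (n - y) * beta_pdf(p, a, b)


evidence = midpoint(numerator, 0.0, 1.0)  # the denominator of Bayes' rule

for p in (0.85, 0.90, 0.95):
    posterior = numerator(p) / evidence
    conjugate = beta_pdf(p, a + y, b + n - y)
    print(p, posterior, conjugate)  # the two columns agree
```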

So, I will spare you the calculation except to say that the NBA prior with $\mu = .672$ and $\sigma^2 = .0074$ leads to $a \approx 19.34$, $b \approx 9.44$.

Now the update: $a^* = 19.34 + 71 = 90.34$ and $b^* = 9.44 + (71 - 71) = 9.44$.
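A minimal Python sketch of this update, assuming the prior $a = 19.34$, $b = 9.44$ obtained from the NBA mean .672 and variance .0074, and comparing the prior and posterior means and standard deviations (the helper name is hypothetical):

```python
import math


def beta_mean_sd(a: float, b: float) -> tuple:
    """Mean and standard deviation of a Beta(a, b) distribution."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)


a_prior, b_prior = 19.34, 9.44  # prior from the NBA mean .672 and variance .0074
makes, misses = 71, 0           # Bird's streak

# Conjugate update: add the makes to a and the misses to b
a_post, b_post = a_prior + makes, b_prior + misses

print("prior:    ", beta_mean_sd(a_prior, b_prior))
print("posterior:", beta_mean_sd(a_post, b_post))
```

The posterior mean comes out near .905 with a standard deviation of roughly .029, compared with a prior standard deviation of about .086: the mean moves right and the distribution narrows, but $p = 1$ is never asserted.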

What does this look like? (I used this calculator)

That is the prior. Now for the posterior:

Yes, it is shifted to the right and very narrow as well. The information has changed, but we avoid the absurd contention that $p = 1$ with a confidence interval of zero width.

We can now calculate a “credible interval” of, say, 90 percent, to see where $p$ most likely lies: use the cumulative distribution function of the posterior to find this out.

In fact, Bird’s lifetime free throw shooting percentage is .882, which is well within this 91.6 percent credible interval, even though the posterior is based on sampling from this one freakish streak.
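For readers without a calculator handy, here is a standard-library Python sketch of an equal-tailed 90 percent credible interval for the Beta$(90.34,\ 9.44)$ posterior: it tabulates the CDF with the midpoint rule and inverts it by bisection plus linear interpolation. The grid size and helper names are assumptions of this sketch; if SciPy is available, `scipy.stats.beta.ppf(q, a, b)` gives the same quantiles directly.

```python
import math
from bisect import bisect_left


def beta_cdf_table(a: float, b: float, n: int = 100_000) -> list:
    """Tabulate the Beta(a, b) CDF at x = i/n, i = 0..n, with the midpoint rule."""
    log_k = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    h = 1.0 / n
    table = [0.0]
    running = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        running += h * math.exp(log_k + (a - 1) * math.log(x) + (b - 1) * math.log(1 - x))
        table.append(running)
    return table


def quantile(table: list, q: float) -> float:
    """Invert the tabulated CDF by bisection plus linear interpolation."""
    n = len(table) - 1
    i = bisect_left(table, q)  # first grid index with CDF >= q
    if i == 0:
        return 0.0
    if i > n:
        return 1.0
    frac = (q - table[i - 1]) / (table[i] - table[i - 1])
    return (i - 1 + frac) / n


cdf = beta_cdf_table(90.34, 9.44)  # posterior after the 71-for-71 streak
lo, hi = quantile(cdf, 0.05), quantile(cdf, 0.95)
print(f"90% equal-tailed credible interval: ({lo:.3f}, {hi:.3f})")
print("contains Bird's career .882:", lo < 0.882 < hi)
```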

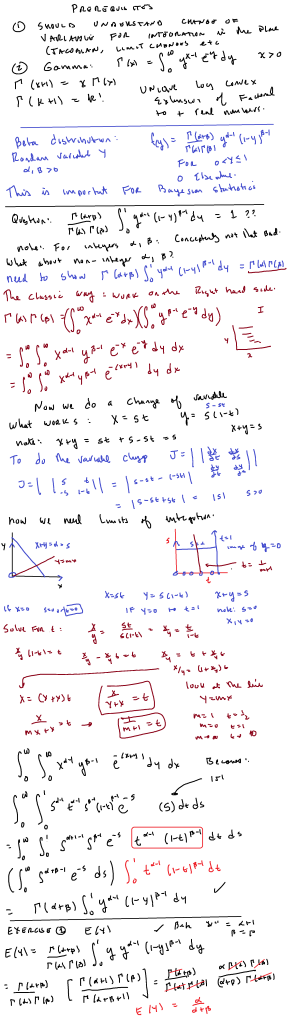

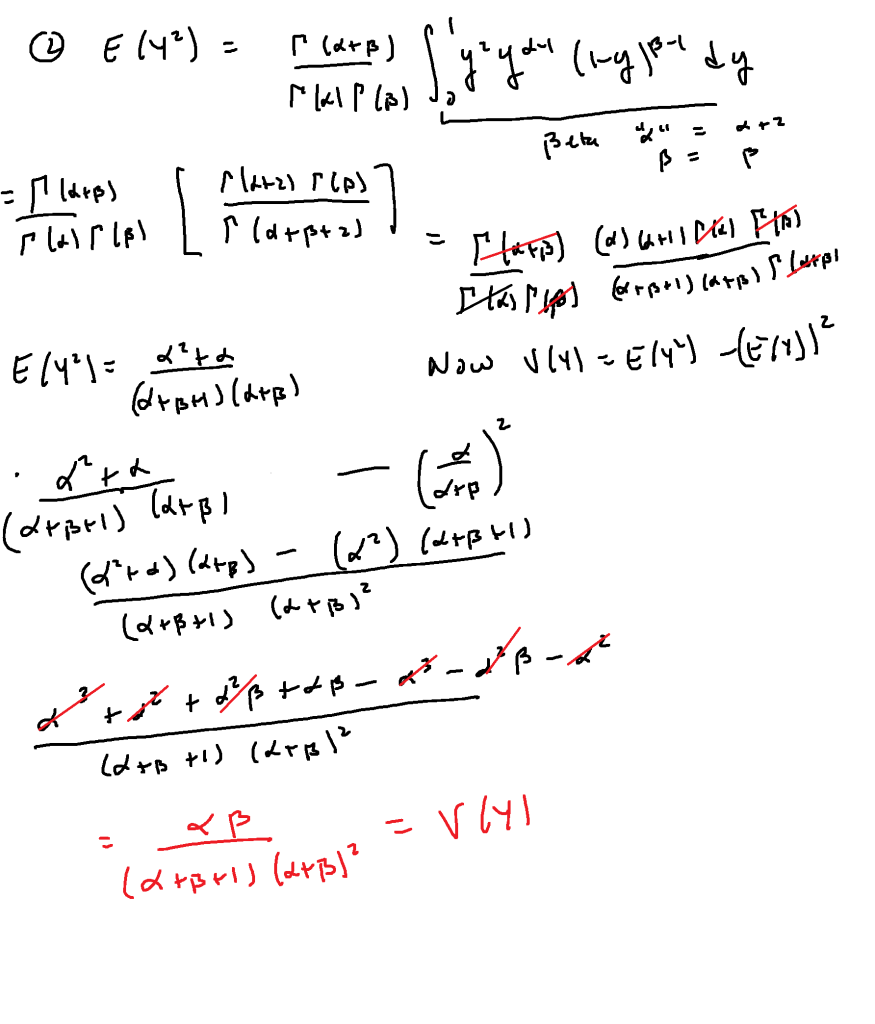

My interest in “beta” functions comes from their utility in Bayesian statistics. A nice 78-minute introduction to Bayesian statistics and how the beta distribution is used can be found here; you need to understand basic mathematical statistics concepts such as “joint density”, “marginal density”, “Bayes’ Rule” and “likelihood function” to follow the YouTube lecture. To follow this post, one should know the standard “3 semesters” of calculus and know what the gamma function is (the extension of the factorial function to the real numbers); previous exposure to the standard “polar coordinates” proof that $\int_{-\infty}^{\infty} e^{-x^2}\,dx = \sqrt{\pi}$ would be very helpful.

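So, what is the beta function? It is $\beta(a,b) = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)}$, where $\Gamma(x) = \int_0^{\infty} t^{x-1} e^{-t}\,dt$. Note that $\Gamma(n+1) = n!$ for integers $n \geq 0$.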
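Python’s standard `math` module already provides `gamma` and `lgamma`, so a quick sketch can confirm $\Gamma(n+1) = n!$ and compute $\beta(a,b)$ from its definition. The helper `beta_fn` is hypothetical; it uses log-gamma so that large arguments do not overflow.

```python
import math


def beta_fn(a: float, b: float) -> float:
    """beta(a, b) = Gamma(a) Gamma(b) / Gamma(a + b), computed with log-gamma."""
    return math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))


# Gamma(n + 1) = n! for the first few non-negative integers
for n in range(10):
    print(n, math.gamma(n + 1), math.factorial(n))

print(beta_fn(2, 3))         # mathematically Gamma(2)Gamma(3)/Gamma(5) = 2/24 = 1/12
print(math.gamma(0.5) ** 2)  # Gamma(1/2)^2 = pi
```

The last line ties back to the polar coordinates proof: $\Gamma(1/2) = \sqrt{\pi}$.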

The gamma function is the unique “logarithmically convex” extension of the factorial function to the positive real numbers (the Bohr–Mollerup theorem, which also requires $\Gamma(1) = 1$ and $\Gamma(x+1) = x\Gamma(x)$), where “logarithmically convex” means that the logarithm of the function is convex; that is, the second derivative of the log of the function is positive. Roughly speaking, this means that the function exhibits growth behavior similar to (or “greater” than) that of an exponential function.
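Log-convexity is easy to probe numerically: a central second difference of `math.lgamma` should be positive everywhere on $(0, \infty)$. The true second derivative of $\log\Gamma$ is the trigamma function, which equals $\pi^2/6$ at $x = 1$, giving a spot check. The step size `h` and the sample points are arbitrary choices for this sketch.

```python
import math


def log_gamma_second_derivative(x: float, h: float = 1e-3) -> float:
    """Central finite-difference estimate of d^2/dx^2 log Gamma(x), for x > h."""
    return (math.lgamma(x + h) - 2 * math.lgamma(x) + math.lgamma(x - h)) / h**2


for x in (0.25, 0.5, 1.0, 2.0, 5.0, 10.0, 50.0):
    print(x, log_gamma_second_derivative(x))  # every value is positive
```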

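Now it turns out that the beta density function is defined as follows: for $0 < x < 1$,

$$f(x) = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)} x^{a-1}(1-x)^{b-1} = \frac{x^{a-1}(1-x)^{b-1}}{\beta(a,b)},$$

so for $f$ to be a density we need $\int_0^1 x^{a-1}(1-x)^{b-1}\,dx = \beta(a,b)$, and one can see that this integral is either proper or a convergent improper integral for $a > 0$, $b > 0$.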

I'll do this in two steps. Step one will convert the beta integral into an integral involving powers of sine and cosine. Step two will be to write $\Gamma(a)\Gamma(b)$ as a product of two integrals, do a change of variables and convert to an improper integral on the first quadrant. Then I'll convert to polar coordinates to show that this integral is equal to $\Gamma(a+b)\int_0^1 x^{a-1}(1-x)^{b-1}\,dx$.

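Step one: converting the beta integral to a sine/cosine integral. Limit $t \in [0, \frac{\pi}{2}]$ and then do the substitution $x = \sin^2(t)$, $dx = 2\sin(t)\cos(t)\,dt$. Then the beta integral becomes:

$$\int_0^1 x^{a-1}(1-x)^{b-1}\,dx = \int_0^{\pi/2} \sin^{2a-2}(t)\cos^{2b-2}(t) \cdot 2\sin(t)\cos(t)\,dt = 2\int_0^{\pi/2} \sin^{2a-1}(t)\cos^{2b-1}(t)\,dt$$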
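A numerical sanity check of step one, that $\int_0^1 x^{a-1}(1-x)^{b-1}\,dx = 2\int_0^{\pi/2}\sin^{2a-1}(t)\cos^{2b-1}(t)\,dt$, sketched in Python with a midpoint-rule integrator. The exponents $a = 2.5$, $b = 1.5$ are illustrative; for them both sides, and $\Gamma(a)\Gamma(b)/\Gamma(a+b)$, equal $\pi/16$.

```python
import math


def midpoint(f, lo: float, hi: float, n: int = 100_000) -> float:
    """Composite midpoint rule with n subintervals."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


a, b = 2.5, 1.5  # illustrative exponents; any a, b >= 1 keep both integrals proper

beta_integral = midpoint(lambda x: x ** (a - 1) * (1 - x) ** (b - 1), 0.0, 1.0)
trig_integral = 2 * midpoint(
    lambda t: math.sin(t) ** (2 * a - 1) * math.cos(t) ** (2 * b - 1), 0.0, math.pi / 2
)
gamma_ratio = math.gamma(a) * math.gamma(b) / math.gamma(a + b)

print(beta_integral, trig_integral, gamma_ratio)  # all three approximate pi/16
```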

Step two: transforming the product of two gamma functions into a double integral and evaluating using polar coordinates.

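Write

$$\Gamma(a)\Gamma(b) = \int_0^{\infty} x^{a-1}e^{-x}\,dx \int_0^{\infty} y^{b-1}e^{-y}\,dy$$

Now do the conversion $x = u^2$, $y = v^2$ to obtain:

$$\Gamma(a)\Gamma(b) = 4\int_0^{\infty} u^{2a-1}e^{-u^2}\,du \int_0^{\infty} v^{2b-1}e^{-v^2}\,dv$$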

(there is a tiny amount of algebra involved)

From which we now obtain

$$\Gamma(a)\Gamma(b) = 4\int_0^{\infty}\int_0^{\infty} u^{2a-1}v^{2b-1}e^{-(u^2+v^2)}\,du\,dv$$

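Now we switch to polar coordinates, remembering the $r$ that comes from evaluating the Jacobian of $u = r\sin(\theta)$, $v = r\cos(\theta)$; the first quadrant corresponds to $0 \le \theta \le \frac{\pi}{2}$, $0 \le r < \infty$:

$$\Gamma(a)\Gamma(b) = 4\int_0^{\pi/2}\int_0^{\infty} r^{2a+2b-2}\sin^{2a-1}(\theta)\cos^{2b-1}(\theta)\,e^{-r^2}\,r\,dr\,d\theta$$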

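This splits into two integrals:

$$\Gamma(a)\Gamma(b) = 2\int_0^{\pi/2}\sin^{2a-1}(\theta)\cos^{2b-1}(\theta)\,d\theta \cdot 2\int_0^{\infty} r^{2a+2b-1}e^{-r^2}\,dr$$

The first of these integrals is just $\int_0^1 x^{a-1}(1-x)^{b-1}\,dx$ (by step one), so now we have:

$$\Gamma(a)\Gamma(b) = \int_0^1 x^{a-1}(1-x)^{b-1}\,dx \cdot 2\int_0^{\infty} r^{2a+2b-1}e^{-r^2}\,dr$$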

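The second integral: we just use $r^2 = x$, $2r\,dr = dx$ to obtain:

$$2\int_0^{\infty} r^{2a+2b-1}e^{-r^2}\,dr = 2\int_0^{\infty} x^{a+b-1}e^{-x}\cdot\frac{1}{2}\,dx = \Gamma(a+b)$$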

(yes, I cancelled the 2 with the 1/2)
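The radial integral can also be checked numerically: $2\int_0^{\infty} r^{2a+2b-1}e^{-r^2}\,dr$ should match `math.gamma(a + b)`. This Python sketch truncates the integral at $r = 12$, an assumption that is harmless because $e^{-144}$ makes the tail negligible; the $(a, b)$ pairs are arbitrary test values.

```python
import math


def midpoint(f, lo: float, hi: float, n: int = 100_000) -> float:
    """Composite midpoint rule with n subintervals."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


def radial_integral(a: float, b: float, r_max: float = 12.0) -> float:
    """2 * integral_0^inf r^(2a+2b-1) e^(-r^2) dr, truncated at r_max (negligible tail)."""
    return 2 * midpoint(lambda r: r ** (2 * a + 2 * b - 1) * math.exp(-r * r), 0.0, r_max)


for a, b in ((2.5, 1.5), (1.0, 1.0), (3.0, 2.0)):
    print(a, b, radial_integral(a, b), math.gamma(a + b))
```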

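And so the result follows: $\Gamma(a)\Gamma(b) = \Gamma(a+b)\int_0^1 x^{a-1}(1-x)^{b-1}\,dx$, that is, $\int_0^1 x^{a-1}(1-x)^{b-1}\,dx = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)} = \beta(a,b)$.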

That seems complicated for a simple little integral, doesn’t it?